Background

A lot has changed in how we build microservices, and most teams now deploy them as pods in Kubernetes. Take the example of a web application that serves pages and also queries and computes product data. If both functions ran on the same web server, a heavy calculation could slow down page responses. Running the product-data logic on a separate server avoids this problem.

What is a service mesh?

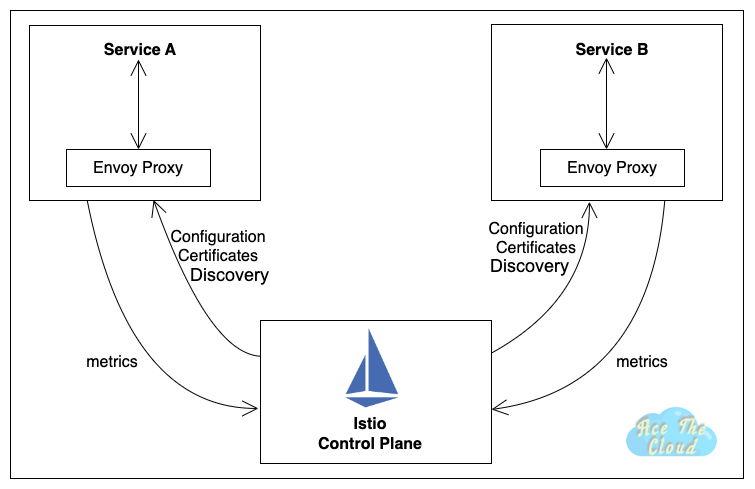

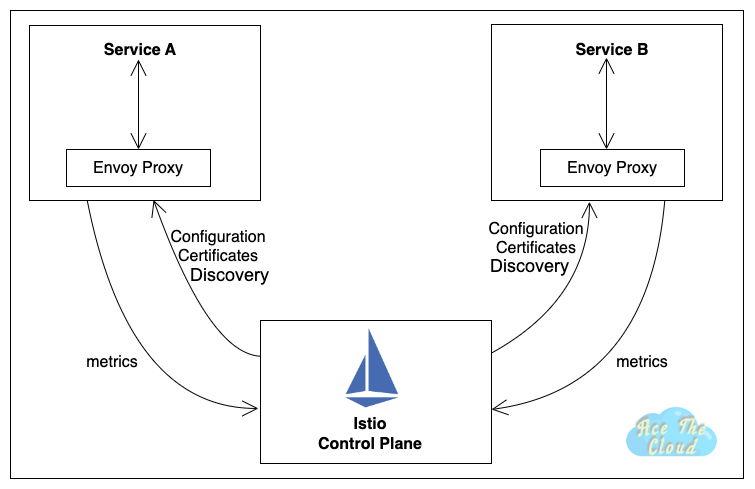

A service mesh is a layer of infrastructure that sits between the microservices in a system and the network, and helps to manage and control the communication between those services. A service mesh typically consists of a network of proxies (also known as “sidecars”) that are deployed alongside the microservices and act as intermediaries for all communication between the services. The proxies handle tasks such as routing traffic, enforcing policies, and providing observability and monitoring.

One of the main advantages of a service mesh is that it allows teams to manage the communication between microservices at a global level, rather than having to handle these tasks on a per-service basis. This can make it easier to implement and enforce policies and patterns across the entire system, and can improve the reliability and performance of the microservices architecture as a whole.

What is an API gateway?

An API gateway is a reverse proxy that sits in front of a group of microservices and acts as a single entry point for external requests. The API gateway routes requests to the appropriate microservice, and can also handle tasks such as load balancing, authentication, and rate limiting.

One of the main advantages of an API gateway is that it can help to decouple the microservices from external clients, allowing teams to make changes to the microservices without affecting the clients. An API gateway can also help to improve the performance of the microservices architecture by offloading tasks such as authentication and rate limiting to the gateway.

Why Service Mesh is better

When it comes to building and managing a microservices architecture, there are a number of tools and technologies that can help. Two of the most popular options are service mesh and API gateway. But what exactly are these technologies, and how do you choose the right one for your organization?

Load balancing is one of a service mesh's core features, and the mesh can easily be configured to split traffic between versions of a service. Consider an example: you are running an application on multiple clusters, and one of your developers has added a new feature to the core application and wants to test it in production with live traffic. The easiest strategy is to deploy the new version as a container or pod inside the cluster (a canary) and divert a portion of the overall traffic to it, collecting the logs and metrics needed to evaluate it.

The service mesh sidecar also collects APM data and logs that the developer can use to analyze the canary instance. These logs and metrics are typically gathered and analyzed with a tool such as Splunk or Datadog. With OpsMx Autopilot, the whole process of testing a deployment through canary analysis can be automated, including automatic rollbacks when necessary.
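At the heart of automated canary analysis is a promote-or-rollback decision. It can be sketched as a comparison of the canary's metrics against the baseline. This is not OpsMx Autopilot's algorithm, which the text does not describe. The metric fields and tolerances here are hypothetical.

```go
package main

import "fmt"

// versionMetrics summarizes what the sidecars reported for one version of
// the service during the analysis window.
type versionMetrics struct {
	requests int
	errors   int
	p99Ms    float64 // 99th-percentile latency in milliseconds
}

func (m versionMetrics) errorRate() float64 {
	if m.requests == 0 {
		return 0
	}
	return float64(m.errors) / float64(m.requests)
}

// verdict compares the canary with the baseline. The tolerances are
// hypothetical knobs: how much the error rate may rise (absolute) and how
// many times slower the canary's p99 latency may be.
func verdict(baseline, canary versionMetrics, maxErrorIncrease, maxLatencyRatio float64) string {
	if canary.errorRate()-baseline.errorRate() > maxErrorIncrease {
		return "rollback"
	}
	if baseline.p99Ms > 0 && canary.p99Ms/baseline.p99Ms > maxLatencyRatio {
		return "rollback"
	}
	return "promote"
}

func main() {
	baseline := versionMetrics{requests: 9000, errors: 45, p99Ms: 120}
	canary := versionMetrics{requests: 1000, errors: 40, p99Ms: 125}
	// The canary's error rate is 4% against the baseline's 0.5%, so this prints "rollback".
	fmt.Println(verdict(baseline, canary, 0.01, 1.2))
}
```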

A service mesh provides critical observability, reliability, and security features. Because it runs at the platform level, it adds no burden to the core business application, and it frees up developers' time so they can focus on value-added work.

A service mesh is an essential management layer that helps all the different pods work in harmony. Here are several reasons why you may want to implement a service mesh in a Kubernetes environment.

Why you should use a service mesh with microservices

A service mesh supports microservices architectures and application deployments, and it brings several other benefits to an organization:

◼ Ensure high availability and fault tolerance.
◼ Gain greater visibility.
◼ Maintain secure communications.
◼ Increase release flexibility.

Istio

Istio extends Kubernetes to establish a programmable, application-aware network using the powerful Envoy service proxy. Working with both Kubernetes and traditional workloads, Istio brings standard, universal traffic management, telemetry, and security to complex deployments.

Traffic Management through Istio

Istio’s traffic routing rules let you easily control the flow of traffic and API calls between services. Istio simplifies configuration of service-level properties like circuit breakers, timeouts, and retries, and makes it easy to set up important tasks like A/B testing, canary rollouts, and staged rollouts with percentage-based traffic splits. It also provides out-of-the-box reliability features that help make your application more resilient against failures of dependent services or the network.

Security

Breaking down a monolithic application into atomic services offers various benefits, including better agility, better scalability and better ability to reuse services. However, microservices also have particular security needs:

◼ To defend against man-in-the-middle attacks, they need traffic encryption.
◼ To provide flexible service access control, they need mutual TLS and fine-grained access policies.
◼ To determine who did what at what time, they need auditing tools.

Istio Security provides a comprehensive solution to these issues, and its features can secure your services wherever you run them. In particular, Istio security mitigates both insider and external threats against your data, endpoints, communication, and platform.

Observability

Istio generates detailed telemetry for all service communications within a mesh. This telemetry provides observability of service behavior, empowering operators to troubleshoot, maintain, and optimize their applications – without imposing any additional burdens on service developers. Through Istio, operators gain a thorough understanding of how monitored services are interacting, both with other services and with the Istio components themselves.

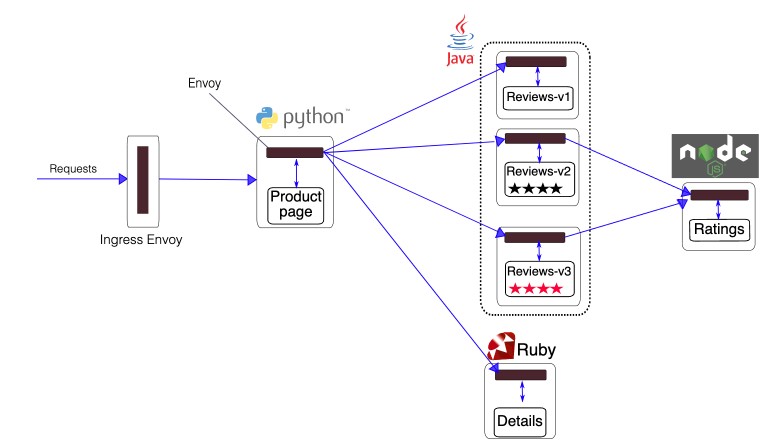

Sample microservice-based application using Istio

Implementation in Go

As described above, a service mesh is an infrastructure layer that manages communication between microservices, providing features like load balancing, service discovery, and security.

In Go, you can build the microservices themselves with the Go Micro framework and let Istio provide the service mesh around them. Here is an outline of that setup:

First, install Go Micro and its dependencies:

| |

Then, create a new Go Micro service by using the micro new command:

| |

This will create a new Go project with a basic service template. You can then add your service logic to the main.go file.

To start the service mesh, run the following command:

| |

Inject the Istio sidecar into your microservice by running the following command:

| |

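Your microservices then communicate through ordinary HTTP or gRPC calls; the injected sidecar intercepts that traffic and applies the mesh's routing, security, and telemetry policies. Istio's Go client library (istio.io/client-go) is for managing Istio configuration resources such as virtual services, not for making RPC calls between services.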

| |

Configure Ingress and Egress in Service Mesh

One way to configure ingress and egress using a service mesh is to use an ingress controller and an egress controller.

An ingress controller is a piece of software that runs inside the service mesh and manages incoming traffic to the mesh. It is responsible for routing incoming traffic to the appropriate service within the mesh based on the hostname and path specified in the incoming request.

To configure ingress using a service mesh, you will need to:

Deploy an ingress controller within the mesh. This can be done using a sidecar proxy, or by deploying a standalone ingress controller.

Create a virtual service within the mesh that matches the hostname and path of incoming requests and tells the ingress controller which service to route them to.

Create a service within the mesh for the backend (in Kubernetes, a Service object), so the virtual service has a destination to route traffic to.
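The matching an ingress controller performs can be sketched as an ordered route table. The first rule whose hostname and path prefix match the request decides the backend, much like the ordered HTTP routes of an Istio virtual service. Exact host matching and prefix-only path matching are simplifying assumptions, and the hostnames and backends are hypothetical.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// route is one ingress rule: requests for host whose path starts with
// pathPrefix are sent to backend.
type route struct {
	host       string
	pathPrefix string
	backend    string
}

// resolve returns the backend of the first matching route, or "" when no
// rule matches (an ingress would then answer 404). Any port in the Host
// header is ignored, and host names compare case-insensitively.
func resolve(routes []route, hostHeader, path string) string {
	host := hostHeader
	if h, _, err := net.SplitHostPort(hostHeader); err == nil {
		host = h
	}
	for _, rt := range routes {
		if strings.EqualFold(rt.host, host) && strings.HasPrefix(path, rt.pathPrefix) {
			return rt.backend
		}
	}
	return ""
}

func main() {
	// Order matters: the more specific rule comes first.
	routes := []route{
		{host: "shop.example.com", pathPrefix: "/api/products", backend: "products:8080"},
		{host: "shop.example.com", pathPrefix: "/", backend: "frontend:8080"},
	}
	fmt.Println(resolve(routes, "shop.example.com:443", "/api/products/42"))
	fmt.Println(resolve(routes, "shop.example.com", "/index.html"))
	fmt.Printf("%q\n", resolve(routes, "other.example.com", "/"))
}
```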

Egress controllers work in a similar way, but they are responsible for managing outgoing traffic from the mesh. To configure egress using a service mesh, you will need to:

Deploy an egress controller within the mesh.

Create a virtual service within the mesh that matches outbound requests by hostname and path and routes them through the egress controller.

Register the external backend service with the mesh (in Istio, with a ServiceEntry), so the egress controller has a destination to route traffic to.
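On the egress side, a common policy is to let traffic leave the mesh only for external hosts that have been registered. Istio supports this with the `REGISTRY_ONLY` outbound traffic policy plus a ServiceEntry per external host. The allow-list below is an illustrative sketch of that check, not Istio's implementation, and the hostnames are hypothetical.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// egressPolicy lets traffic leave the mesh only for registered external
// hosts, similar in spirit to Istio's REGISTRY_ONLY mode.
type egressPolicy struct {
	allowed map[string]bool
}

// newEgressPolicy registers the external hosts that services may call.
func newEgressPolicy(hosts ...string) *egressPolicy {
	p := &egressPolicy{allowed: make(map[string]bool)}
	for _, h := range hosts {
		p.allowed[strings.ToLower(h)] = true
	}
	return p
}

// permits reports whether an outbound request to hostport may leave the
// mesh; any port is ignored for the check.
func (p *egressPolicy) permits(hostport string) bool {
	host := hostport
	if h, _, err := net.SplitHostPort(hostport); err == nil {
		host = h
	}
	return p.allowed[strings.ToLower(host)]
}

func main() {
	policy := newEgressPolicy("api.payments.example.com")
	for _, dest := range []string{"api.payments.example.com:443", "unknown.example.net:443"} {
		fmt.Println(dest, policy.permits(dest))
	}
}
```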

It is important to note that the specific steps for configuring ingress and egress using a service mesh will depend on the specific service mesh implementation you are using. Consult the documentation for your chosen service mesh for more detailed instructions.

Read more about the Bookinfo app on the official Istio website.