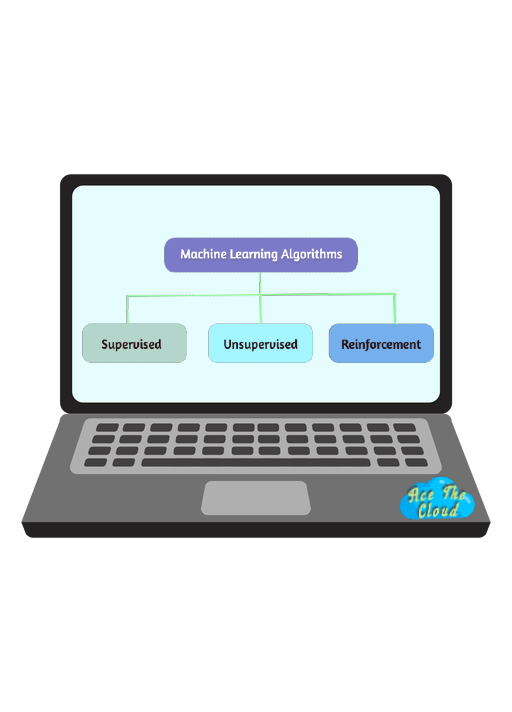

Machine learning algorithms are a set of techniques that allow computers to learn from data and make predictions or decisions without explicit programming. These algorithms can be broadly classified into three categories: supervised learning, unsupervised learning, and reinforcement learning.

Categories of Machine Learning Algorithms

1. Supervised learning algorithms:

Supervised learning algorithms are used when we have a dataset with labeled input and output examples. The goal of these algorithms is to learn a function that can map the input data to the corresponding output labels. Some examples of supervised learning algorithms are:

Linear regression: This algorithm is used to predict a continuous outcome variable based on one or more predictor variables. It assumes a linear relationship between the predictor and the outcome variables.

Logistic regression: This algorithm is used to predict a binary outcome variable based on one or more predictor variables. It is a generalized linear model that uses the sigmoid (logistic) function to model the probability that the outcome variable equals 1.

Decision trees: This algorithm builds a tree-like model that can be used for classification or regression tasks. The model makes predictions by following the branches of the tree according to the values of the input features.

Support vector machines (SVMs): This algorithm is mainly used for classification. It finds the hyperplane that separates two classes with the largest margin, often after implicitly mapping the data into a higher-dimensional space with a kernel function.

2. Unsupervised learning algorithms:

Unsupervised learning algorithms are used when we have a dataset with only input examples and no corresponding output labels. The goal of these algorithms is to find patterns or structures in the data. Some examples of unsupervised learning algorithms are:

K-means clustering: This algorithm partitions the data into k clusters based on the similarity of the data points. It alternates between assigning each point to its nearest centroid and recomputing each centroid as the mean of its assigned points, until the assignments stop changing.

Hierarchical clustering: This algorithm builds a tree-like model (a dendrogram) that represents the relationships between the data points. In its common agglomerative form, it starts with each data point as its own cluster and repeatedly merges the two most similar clusters.

Principal component analysis (PCA): This algorithm reduces the dimensionality of the data by projecting it onto a lower-dimensional space. It does this by finding the directions in the data with the highest variance and projecting the data onto these directions.

3. Reinforcement learning algorithms:

Reinforcement learning algorithms are used to train an agent to take actions in an environment to maximize a reward signal. These algorithms are often used in robotics, self-driving cars, and game playing. Some examples of reinforcement learning algorithms are:

Q-learning: This algorithm is used to learn the optimal action-selection policy in a Markov decision process. It does this by iteratively updating a Q-table that stores, for each state and action, the expected cumulative discounted reward of taking that action in that state and acting optimally afterwards.

Deep Q-network (DQN): This algorithm is an extension of Q-learning that uses a neural network to approximate the Q-function. It has been used to achieve human-level performance on many Atari games.

In summary, the choice of a machine learning algorithm depends on the type of data you have, the type of task you want to perform, and the resources you have available. It is important to understand the characteristics and limitations of each algorithm to make an informed decision about which one to use.

Hands-On with Supervised Learning Algorithms

1. Linear Regression

Linear regression is a machine learning algorithm that is used for predicting a continuous target variable based on one or more explanatory variables. It is based on the idea of finding a linear relationship between the explanatory variables and the target variable.

Here is an example of linear regression in Python using the scikit-learn library:

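A minimal sketch of this workflow, assuming a made-up dataset whose five points lie exactly on the line y = 2x + 1 (the values are illustrative, not taken from the original example):

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Assumed toy data: 5 points, one explanatory variable (X) and a target (y).
# scikit-learn expects X to be 2-D with shape (n_samples, n_features).
X = np.array([[1], [2], [3], [4], [5]])
y = np.array([3, 5, 7, 9, 11])  # illustrative values following y = 2x + 1

# Create the model and fit it to the data
model = LinearRegression()
model.fit(X, y)

# Predict the target for a new data point with X = 6
prediction = model.predict(np.array([[6]]))
print(prediction)  # approximately 13, since the assumed data follow y = 2x + 1
```

Because the assumed data are perfectly linear, the learned slope (`model.coef_`) is 2 and the intercept (`model.intercept_`) is 1, up to floating-point error.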

In this example, we have a sample dataset with 5 data points, each with a single explanatory variable (X) and a target variable (y). We create a linear regression model and fit it to the data using the fit method. Then, we use the predict method to make a prediction for a new data point with an explanatory variable of 6.

The linear regression model learns the linear relationship between the explanatory and target variables by minimizing the sum of the squared errors between the predicted and actual values. The model parameters, such as the intercept and coefficients, are learned during the training process.
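To make "minimizing the sum of the squared errors" concrete, the sketch below solves the least-squares problem directly with NumPy's `np.linalg.lstsq`, on a small assumed dataset that follows y = 2x + 1. Adding a column of ones lets the intercept be learned like any other coefficient; the recovered values correspond to what scikit-learn's `LinearRegression` exposes as `intercept_` and `coef_`.

```python
import numpy as np

# Assumed toy data following y = 2x + 1
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
y = np.array([3.0, 5.0, 7.0, 9.0, 11.0])

# Prepend a column of ones so the intercept is treated as an ordinary weight
X_design = np.hstack([np.ones((X.shape[0], 1)), X])

# Ordinary least squares: find w minimizing ||X_design @ w - y||^2
w, residuals, rank, singular_values = np.linalg.lstsq(X_design, y, rcond=None)
intercept, slope = w[0], w[1]

# Sum of squared errors at the optimum (zero here because the data are exactly linear)
sse = np.sum((X_design @ w - y) ** 2)
print(intercept, slope, sse)
```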

In summary, linear regression is a machine learning algorithm that is used for predicting a continuous target variable based on one or more explanatory variables. It is based on the idea of finding a linear relationship between the variables and can be implemented in Python using the scikit-learn library.

2. Logistic Regression

Logistic regression is a type of classification algorithm that is used to predict a binary outcome, such as “yes” or “no,” “0” or “1,” or “true” or “false.” It is based on the logistic function, which maps a continuous input to a value between 0 and 1.

Here is an example of how to implement logistic regression in Python using the scikit-learn library:

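A sketch of these steps, assuming scikit-learn's bundled breast-cancer dataset as the binary classification data (the original dataset is not specified). Standardizing the features with `StandardScaler` is an added assumption that helps the default solver converge; `C` is the inverse of the regularization strength.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Assumed data: a binary classification dataset bundled with scikit-learn
X, y = load_breast_cancer(return_X_y=True)

# Split into training and testing sets (80/20, class proportions preserved)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Standardize the features, then fit logistic regression (C = inverse regularization strength)
model = make_pipeline(StandardScaler(), LogisticRegression(C=1.0))
model.fit(X_train, y_train)

# Predict labels (0 or 1) for the test set
y_pred = model.predict(X_test)

# score() returns the mean accuracy on the given data
accuracy = model.score(X_test, y_test)
print(f"Accuracy: {accuracy:.3f}")
```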

In this code, the first step is to import the necessary libraries. Then, the data is loaded and split into training and testing sets. Next, the logistic regression model is created and trained on the training data using the fit function. The model is then used to make predictions on the testing data using the predict function. Finally, the model performance is evaluated using the score function, which returns the accuracy of the predictions.

Logistic regression is a simple and effective algorithm that can be used for a wide range of binary classification tasks. It is fast to train and easy to interpret, but it can be sensitive to the choice of the regularization parameter and may not perform well when there are non-linear relationships in the data.

3. Decision Trees

Decision trees are a type of machine learning algorithm that can be used for both classification and regression tasks. They are based on the idea of creating a tree-like model of decisions, with each internal node representing a decision based on the value of a feature, and each leaf node representing the prediction.

Here is a simple example of how to train a decision tree classifier in Python using the scikit-learn library:

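A sketch of this workflow, assuming the Iris dataset bundled with scikit-learn as the data and an 80/20 train/test split:

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumed data: the Iris dataset (150 samples, 4 features, 3 classes)
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create the classifier and train it on the training data
clf = DecisionTreeClassifier(random_state=42)
clf.fit(X_train, y_train)

# Test on held-out data and compute the accuracy
y_pred = clf.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.3f}")
```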

In the above example, we first load the data and split it into training and testing sets using the train_test_split function from scikit-learn. Then, we create a DecisionTreeClassifier object and train it on the training data using the fit function. Finally, we test the classifier on the testing data and calculate the accuracy using the accuracy_score function.

Decision trees have several hyperparameters that can be tuned to improve the performance of the model, such as the maximum depth of the tree, the minimum number of samples required to split a node, and the criterion used to split the nodes. These hyperparameters can be set when creating the DecisionTreeClassifier object, as shown below:

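A sketch of passing these hyperparameters to the constructor, assuming the Iris data for fitting; `random_state` is added only to make the result reproducible:

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

# Assumed data: the Iris dataset
X, y = load_iris(return_X_y=True)

# Max depth 5, at least 10 samples to split a node, Gini impurity as the split criterion
clf = DecisionTreeClassifier(max_depth=5, min_samples_split=10, criterion="gini", random_state=42)
clf.fit(X, y)

print(clf.get_depth())  # depth of the fitted tree, never more than max_depth
```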

In this example, we set the maximum depth of the tree to 5, the minimum number of samples required to split a node to 10, and the splitting criterion to Gini impurity (gini, which is also scikit-learn's default).

Decision trees are simple and easy to implement, and they can handle both numerical and categorical data. However, they can be prone to overfitting, especially if the tree is allowed to grow too deep. To prevent overfitting, it is recommended to use techniques such as pruning or setting a maximum depth for the tree.

4. Support Vector Machines (SVMs)

Support vector machines (SVMs) are a type of machine learning algorithm that can be used for both classification and regression tasks. For classification, they look for the hyperplane in the feature space that separates the classes with the largest margin; support vector regression adapts the same idea to predict continuous values.

Here is a simple example of how to train a linear SVM classifier in Python using the scikit-learn library:

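A sketch assuming the Iris data and an 80/20 split. Iris has three classes, which `SVC` handles internally with a one-vs-one scheme:

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Assumed data: the Iris dataset
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# SVM classifier with a linear kernel
clf = SVC(kernel="linear")
clf.fit(X_train, y_train)

# Evaluate on the test set
y_pred = clf.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.3f}")
```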

In the above example, we first load the data and split it into training and testing sets using the train_test_split function from scikit-learn. Then, we create an SVC object with a linear kernel and train it on the training data using the fit function. Finally, we test the classifier on the testing data and calculate the accuracy using the accuracy_score function.

SVMs have several hyperparameters that can be tuned to improve the performance of the model, such as the kernel type, the regularization parameter, and the kernel-specific parameters. These hyperparameters can be set when creating the SVC object, as shown below:

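A sketch of these settings, assuming the Iris data; for the RBF kernel, the kernel-specific parameter of scikit-learn's `SVC` is `gamma`:

```python
from sklearn.datasets import load_iris
from sklearn.svm import SVC

# Assumed data: the Iris dataset
X, y = load_iris(return_X_y=True)

# RBF kernel, regularization parameter C = 1.0, kernel coefficient gamma = 0.1
clf = SVC(kernel="rbf", C=1.0, gamma=0.1)
clf.fit(X, y)

print(clf.score(X, y))  # training accuracy
```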

In this example, we set the kernel type to RBF (radial basis function), the regularization parameter C to 1.0, and the RBF kernel coefficient gamma to 0.1.

SVMs are powerful and effective machine learning algorithms, and they have been widely used in many applications. However, they can be sensitive to the choice of the hyperparameters and may require careful tuning to achieve good performance. They can also be computationally expensive to train, especially for large datasets.

Hands-On with Unsupervised Learning Algorithms

1. K-means clustering

K-means clustering is an unsupervised machine learning algorithm that is used to group data points into “k” clusters based on their similarity. It is based on the idea of iteratively assigning data points to the closest cluster center and then updating the cluster centers to the mean of the data points in the cluster.
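The assign-then-update loop can be sketched from scratch in NumPy. This is an illustration under stated assumptions (random initialization from the data points, Euclidean distance, a made-up six-point dataset, and hypothetical function names `kmeans` and `assign_clusters`), not scikit-learn's implementation:

```python
import numpy as np

def assign_clusters(X, centroids):
    # Index of the nearest centroid (Euclidean distance) for every point
    distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return distances.argmin(axis=1)

def kmeans(X, k, n_iters=100, seed=0):
    rng = np.random.default_rng(seed)
    # Assumed initialization: k distinct, randomly chosen data points
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iters):
        # Assignment step: each point joins its closest centroid
        labels = assign_clusters(X, centroids)
        # Update step: each centroid moves to the mean of its points
        # (an empty cluster keeps its previous centroid)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Stop once the centroids no longer move
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return assign_clusters(X, centroids), centroids

# Assumed toy data: two well-separated groups of three points
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
              [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]])
labels, centroids = kmeans(X, k=2)
print(labels, centroids)
```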

Here is a simple example of how to use the k-means clustering algorithm in Python using the scikit-learn library:

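A sketch assuming synthetic data from `make_blobs` with three groups; `n_init` is set explicitly because its default value has changed between scikit-learn versions:

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Assumed data: 300 synthetic 2-D points drawn around 3 centers
X, _ = make_blobs(n_samples=300, centers=3, random_state=42)

# k = 3 clusters; run 10 initializations and keep the best one
kmeans = KMeans(n_clusters=3, n_init=10, random_state=42)
kmeans.fit(X)

# Cluster index (0, 1, or 2) for every point
labels = kmeans.predict(X)
print(kmeans.cluster_centers_)
```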

In the above example, we first load the data and create a KMeans object with the desired number of clusters (in this case, 3). Then, we fit the model to the data using the fit method and predict the cluster label of each data point using the predict method.

K-means clustering has several hyperparameters that can be tuned to improve the performance of the model, such as the number of clusters (k), the initialization method, and the maximum number of iterations. These hyperparameters can be set when creating the KMeans object, as shown below:

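A sketch with those settings, assuming synthetic `make_blobs` data with five groups. Note that `init="k-means++"` and `max_iter=300` are also scikit-learn's defaults:

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Assumed data: 300 synthetic 2-D points drawn around 5 centers
X, _ = make_blobs(n_samples=300, centers=5, random_state=42)

# k = 5, k-means++ seeding, at most 300 iterations per run
kmeans = KMeans(n_clusters=5, init="k-means++", max_iter=300, n_init=10, random_state=42)
labels = kmeans.fit_predict(X)
print(kmeans.cluster_centers_)
```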

In this example, we set the number of clusters to 5, the initialization method to ‘k-means++’, and the maximum number of iterations to 300.

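K-means clustering is a fast and effective algorithm that is widely used for clustering tasks. However, it converges to a local optimum that depends on the initial centroids, so it may not always produce the best clusters; scikit-learn can mitigate this by running the algorithm several times with different initial centroids (the n_init parameter) and keeping the best result. It is also important to choose an appropriate number of clusters (k), because the algorithm always returns exactly k clusters whether or not the data naturally contain that many.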

2. Hierarchical clustering

Hierarchical clustering is a machine learning technique that is used to group data points into clusters based on their similarity. It is an unsupervised learning method that does not require labeled data.

There are two main types of hierarchical clustering: agglomerative and divisive. Agglomerative clustering starts with each data point as its own cluster and repeatedly merges the most similar clusters into larger ones, while divisive clustering starts with a single cluster containing all the data points and repeatedly splits it into smaller clusters.

Here is a simple example of how to perform agglomerative hierarchical clustering in Python using the scikit-learn library:

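A sketch assuming synthetic `make_blobs` data with three groups:

```python
from sklearn.cluster import AgglomerativeClustering
from sklearn.datasets import make_blobs

# Assumed data: 100 synthetic 2-D points drawn around 3 centers
X, _ = make_blobs(n_samples=100, centers=3, random_state=42)

model = AgglomerativeClustering(n_clusters=3)
# fit_predict builds the hierarchy and returns each point's cluster label in one call
labels = model.fit_predict(X)
print(labels)
```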

In the above example, we first load the data and create an AgglomerativeClustering object with the desired number of clusters. Then, we call fit_predict, which fits the model to the data and returns the cluster label of each data point in a single step (AgglomerativeClustering has no separate predict method for new data).

Hierarchical clustering has several hyperparameters that can be tuned to achieve the best performance for the given task, such as the number of clusters, the distance measure, and the linkage criterion. These hyperparameters can be set when creating the AgglomerativeClustering object, as shown below:


In this example, we set the number of clusters to 5, the distance metric to Euclidean, and the linkage criterion to Ward (Ward linkage only works with Euclidean distances).

Hierarchical clustering is a simple and effective technique that can be used for a wide range of tasks. However, it can be sensitive to the choice of the distance metric and the linkage criterion, and may not always produce meaningful clusters. It is also computationally expensive for large datasets, since standard agglomerative algorithms need time and memory that grow at least quadratically with the number of data points.

3. Principal component analysis (PCA)

Principal component analysis (PCA) is a machine learning technique that is used for dimensionality reduction and data visualization. It is based on the idea of projecting the data onto a lower-dimensional space, such that the new features are orthogonal (uncorrelated) and capture the most variation in the data.

Here is a simple example of how to perform PCA in Python using the scikit-learn library:

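A sketch assuming the four-feature Iris dataset as the data:

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Assumed data: the Iris dataset (150 samples, 4 features)
X, _ = load_iris(return_X_y=True)

# Keep 2 principal components
pca = PCA(n_components=2)
pca.fit(X)
X_2d = pca.transform(X)  # shape (150, 2), ready to plot

print(pca.explained_variance_ratio_)  # share of the variance captured by each component
```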

In the above example, we first load the data and create a PCA object with the desired number of components (in this case, 2). Then, we fit the model to the data using the fit function, and transform the data to the new space using the transform function. The transformed data can then be plotted or used for further analysis.

PCA has several hyperparameters that can be tuned to adjust the behavior of the algorithm, such as the number of components to keep and the method used to compute the principal components. These hyperparameters can be set when creating the PCA object, as shown below:

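A sketch of those settings on the Iris data (the dataset is an assumption). A float between 0 and 1 for `n_components` tells scikit-learn to choose the number of components automatically, and the chosen value is available afterwards as `n_components_`:

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Assumed data: the Iris dataset
X, _ = load_iris(return_X_y=True)

# Keep the fewest components that explain at least 95% of the variance,
# computed with an exact full SVD
pca = PCA(n_components=0.95, svd_solver="full")
X_reduced = pca.fit_transform(X)

print(pca.n_components_, pca.explained_variance_ratio_.sum())
```

On Iris, the first two components already explain more than 95% of the variance, so two components are kept.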

In this example, we set the number of components to 0.95, which means that the model keeps the smallest number of components needed to retain at least 95% of the variance in the data. We also set svd_solver to ‘full’, which computes the principal components with an exact, full singular value decomposition (SVD).

PCA is a fast and effective method for dimensionality reduction and data visualization. However, it is sensitive to the scaling of the data, since features with large numeric ranges dominate unless the data are standardized first. Because it is a linear projection, it also cannot capture non-linear structure, and the variance in the discarded components is lost. It is also important to keep in mind that each principal component is a linear combination of all the original features with an arbitrary sign, so it may not correspond to a meaningful feature in the data.

Hands-On with Reinforcement Learning Algorithms

1. Q-learning

Q-learning is a type of reinforcement learning algorithm that is used to learn the optimal action to take in a given state. It is based on the idea of creating a “Q-table” that stores the expected cumulative discounted reward for taking a particular action in a given state and acting optimally afterwards. The Q-table is updated iteratively as the agent interacts with the environment and receives rewards.

Here is a simple example of how to implement a Q-learning algorithm in Python:

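The original environment is not specified, so this sketch assumes a hypothetical five-state corridor (the names `step`, `N_STATES`, and `GOAL` are illustrative) and uses epsilon-greedy action selection. Otherwise it follows the steps described in the paragraph after it: a zero-initialized Q-table, a random state each iteration, the Q-learning update, and a greedy policy read off the table at the end.

```python
import numpy as np

# Hypothetical environment: states 0..4 in a row. Action 0 moves left, action 1 moves
# right; reaching state 4 (the goal) gives reward 1 and ends the episode.
N_STATES, N_ACTIONS, GOAL = 5, 2, 4

def step(state, action):
    """Return (next_state, reward, done) for the toy corridor."""
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

rng = np.random.default_rng(0)
q_table = np.zeros((N_STATES, N_ACTIONS))  # Q-table initialized with zeros
gamma = 0.9      # discount factor
alpha = 0.1      # learning rate
epsilon = 0.2    # exploration rate (assumed epsilon-greedy selection)
n_iterations = 10_000

for _ in range(n_iterations):
    # Select a random non-goal state
    state = int(rng.integers(0, GOAL))
    # Epsilon-greedy: usually the best known action, sometimes a random one
    if rng.random() < epsilon:
        action = int(rng.integers(N_ACTIONS))
    else:
        action = int(np.argmax(q_table[state]))
    # Take the action and observe the reward and next state
    next_state, reward, done = step(state, action)
    # Q-learning update: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))
    best_next = 0.0 if done else np.max(q_table[next_state])
    q_table[state, action] += alpha * (reward + gamma * best_next - q_table[state, action])

# Greedy policy: the action with the highest Q-value in each state
policy = np.argmax(q_table, axis=1)
print(policy[:GOAL])  # [1 1 1 1]: move right in every non-goal state
```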

In the above example, we first initialize the Q-table with zeros and set the discount factor, learning rate, and number of iterations. Then, we loop over the number of iterations and select a random state, select an action based on the current state and the Q-table, take the action and observe the reward and next state, and update the Q-table using the observed reward and the maximum expected reward for the next state. Finally, we use the Q-table to make predictions by selecting the action with the maximum expected reward for each state.

Q-learning is a powerful reinforcement learning algorithm that can be used to solve a wide range of problems. However, because the Q-table has one entry per state-action pair, it becomes impractical for large or continuous state spaces, and it may require fine-tuning of hyperparameters such as the learning rate and the discount factor to achieve good performance.

2. Deep Q-network

Deep Q-network (DQN) is a type of reinforcement learning algorithm that is used to learn a policy for choosing actions in an environment in order to maximize a reward. It is based on the idea of using a neural network to approximate the action-value function (Q-function), which represents the expected long-term reward for taking a specific action in a specific state.

Here is a simple example of how to implement a DQN in Python using the Keras library:

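A sketch of the training step explained in the next two paragraphs. It assumes `model` is a compiled Keras model that maps a batch of states to one Q-value per action (for example, built with `keras.Sequential` and compiled with a mean-squared-error loss); the function names `compute_dqn_targets` and `train_step` are hypothetical. The target computation is kept as a separate NumPy function so it can be checked without TensorFlow.

```python
import numpy as np

def compute_dqn_targets(q_current, q_next, actions, rewards, dones, gamma=0.99):
    """Build regression targets for a batch of transitions.

    q_current, q_next: arrays of shape (batch, n_actions) predicted for the
    current and next states; actions, rewards, dones: arrays of length batch.
    """
    actions = np.asarray(actions, dtype=int)
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)
    # Terminal transitions have no future reward, so their next-state value is 0
    next_values = np.max(q_next, axis=1) * (1.0 - dones)
    # Bellman target for the action that was actually taken
    td_targets = rewards + gamma * next_values
    # Start from the current predictions so untaken actions get zero error,
    # then overwrite only the Q-value of the action taken in each transition
    targets = np.array(q_current, dtype=float, copy=True)
    targets[np.arange(len(actions)), actions] = td_targets
    return targets

def train_step(model, states, actions, rewards, next_states, dones, gamma=0.99):
    """One DQN update; `model` is assumed to be a compiled Keras model (e.g. MSE loss)."""
    q_next = model.predict(next_states, verbose=0)   # Q-values of the next states
    q_current = model.predict(states, verbose=0)     # Q-values of the current states
    targets = compute_dqn_targets(q_current, q_next, actions, rewards, dones, gamma)
    model.fit(states, targets, epochs=1, verbose=0)  # move predictions toward the targets
    return targets
```

With an MSE loss, the untouched entries contribute zero error, so each update only moves the Q-value of the action that was taken.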

In the above code, we first predict the Q-values of the next states using the model. Then, for transitions that ended the episode, we set the next-state values to 0, because no future reward follows a terminal state. Next, we calculate the targets for each state-action pair using the rewards and the Q-values of the next states.

Then, we predict the Q-values of the current states using the model. Finally, we update the Q-values of the actions taken in the batch using the targets, and train the model on the states and updated Q-values using the fit function.

This is a simplified version of a DQN implementation, and there are several additional techniques that can be used to improve the performance, such as using a target network to stabilize the training, using a replay buffer to break the temporal correlations in the data, and using a prioritized replay buffer to focus on the most important experiences.
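Of the techniques listed above, the replay buffer is the simplest to sketch. The class below (the name `ReplayBuffer` and its methods are hypothetical) stores transitions in a bounded `deque` and samples uniform random minibatches whose arrays could be passed to a DQN training step:

```python
import random
from collections import deque

import numpy as np

class ReplayBuffer:
    """Bounded store of past transitions, sampled uniformly at random (hypothetical helper)."""

    def __init__(self, capacity):
        # Once full, appending a new transition silently drops the oldest one
        self.buffer = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform random sampling breaks up correlations between consecutive steps
        batch = random.sample(self.buffer, batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return (
            np.array(states),
            np.array(actions),
            np.array(rewards, dtype=float),
            np.array(next_states),
            np.array(dones, dtype=float),
        )

    def __len__(self):
        return len(self.buffer)

# Usage with made-up one-dimensional states
buffer = ReplayBuffer(capacity=100)
for t in range(150):
    buffer.add(state=[float(t)], action=t % 2, reward=1.0, next_state=[float(t + 1)], done=False)
states, actions, rewards, next_states, dones = buffer.sample(batch_size=32)
```

Because the capacity is 100, only the 100 most recent of the 150 transitions remain, so every sampled state comes from steps 50 to 149.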

In summary, DQN is a type of reinforcement learning algorithm that is used to learn a policy for choosing actions in an environment in order to maximize a reward. It is based on the idea of using a neural network to approximate the action-value function and using the Bellman equation to update the Q-values of the actions taken. There are several techniques that can be used to improve the performance of a DQN, such as using a target network, a replay buffer, and a prioritized replay buffer.