Background

Artificial intelligence (AI) has the potential to revolutionize many industries and transform the way we live and work. However, as AI systems become more complex and sophisticated, it becomes harder to understand how they reach their decisions and why. This lack of transparency can be a significant barrier to AI adoption, because organizations find it difficult to trust and rely on systems whose decisions they cannot explain.

Enter explainable AI (XAI), a set of techniques and technologies that make AI systems more transparent and interpretable. XAI techniques let users understand how an AI system reaches its decisions and why, which helps organizations build trust in their AI systems and make more informed decisions about how to use them.

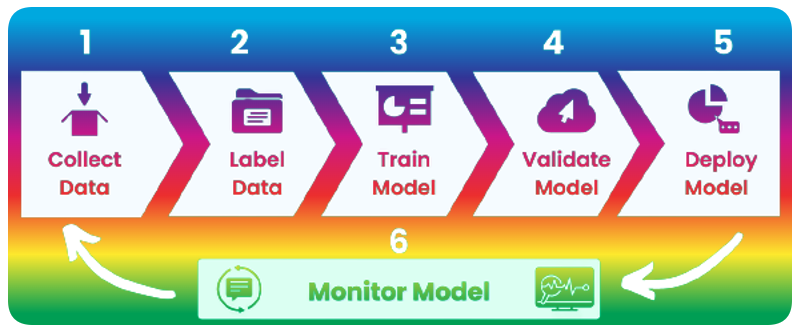

In addition to XAI, another key tool for improving AI performance and transparency is model monitoring: tracking the performance and accuracy of machine learning models over time. It helps organizations ensure that their models are performing as expected and delivering accurate results, and it can reveal where models are underperforming or experiencing drift.

By combining XAI techniques with model monitoring, organizations can turn their AI systems from “black boxes” into “glass boxes,” gaining a deeper understanding of how their models function and how they can be improved.

Explainable AI and model monitoring are closely related, as both are concerned with understanding and improving the performance and accuracy of AI systems. Explainable AI techniques help organizations understand how their models make decisions, while model monitoring helps them track those models’ performance and accuracy over time.

For example, a team can use explainable AI techniques to identify where a model’s decisions are hard to interpret, and then use model monitoring to track the model’s performance and accuracy over time to see whether those decisions are affecting its overall performance.
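One concrete way to combine the two is to treat an explanation itself as a monitored metric. The sketch below is a minimal illustration under stated assumptions, not a prescribed method: it computes permutation feature importance (the drop in accuracy when one feature column is shuffled) on a reference window and on a current window of labeled data, then flags features whose importance moved by more than a threshold. The function names, the `threshold=0.05` default, and the `Model` interface (any callable that maps one feature row to a class label) are hypothetical choices made for this example.

```python
import random
from typing import Callable, List, Sequence, Tuple

Row = Sequence[float]
Model = Callable[[Row], int]  # hypothetical interface: one feature row -> class label


def accuracy(model: Model, X: Sequence[Row], y: Sequence[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: Sequence[Row], y: Sequence[int],
                           rng: random.Random) -> List[float]:
    """Accuracy drop when each feature column is shuffled (higher = more important)."""
    baseline = accuracy(model, X, y)
    scores = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)
        X_perm = [list(row) for row in X]
        for row, value in zip(X_perm, column):
            row[j] = value  # break the link between feature j and the label
        scores.append(baseline - accuracy(model, X_perm, y))
    return scores


def importance_shift(model: Model,
                     reference: Tuple[Sequence[Row], Sequence[int]],
                     current: Tuple[Sequence[Row], Sequence[int]],
                     threshold: float = 0.05,
                     seed: int = 0) -> Tuple[List[int], List[float]]:
    """Return (features whose importance moved by more than threshold, per-feature deltas)."""
    rng = random.Random(seed)
    ref_imp = permutation_importance(model, reference[0], reference[1], rng)
    cur_imp = permutation_importance(model, current[0], current[1], rng)
    deltas = [cur - ref for cur, ref in zip(cur_imp, ref_imp)]
    flagged = [j for j, d in enumerate(deltas) if abs(d) > threshold]
    return flagged, deltas
```

For example, take a toy model `lambda row: int(row[0] > 0)` that was accurate on the reference window. If the labels later start to follow feature 1 instead (concept drift), feature 0 should be flagged because its importance collapses, even though the distribution of the inputs has not changed at all.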

Model Monitoring

Model monitoring is the practice of tracking the performance and accuracy of machine learning models over time. It is an important part of machine learning operations (MLOps) and helps organizations ensure that their models are performing as expected and delivering accurate results.
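A common building block for drift detection is comparing the distribution of a feature (or of the model’s output scores) in production against a reference sample such as the training data. The sketch below computes the population stability index (PSI), one widely used drift statistic, with equal-width bins derived from the reference data. The bin count, the small `eps` floor that avoids taking the log of zero, and the function names are illustrative assumptions, not part of any particular monitoring product.

```python
import bisect
import math
from typing import List, Sequence


def _interior_edges(reference: Sequence[float], n_bins: int) -> List[float]:
    """Equal-width bin boundaries spanning the reference data (interior edges only)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins
    return [lo + width * k for k in range(1, n_bins)]


def _bin_proportions(values: Sequence[float], edges: List[float], eps: float) -> List[float]:
    """Share of values in each bin; out-of-range values land in the first or last bin."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    # Floor empty bins at eps so the log term below stays finite.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               n_bins: int = 10, eps: float = 1e-4) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    edges = _interior_edges(reference, n_bins)
    expected = _bin_proportions(reference, edges, eps)
    actual = _bin_proportions(current, edges, eps)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A frequently quoted rule of thumb reads a PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift; these cut-offs are conventions to tune per feature, not guarantees.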

Model monitoring offers several benefits, many of which translate into lower costs:

- Early detection of model degradation:

By continuously monitoring the performance and accuracy of your models, you can detect any degradation or drift in performance early on. This allows you to take corrective action before the model becomes completely unusable, which can save time and resources.

- Improved model performance:

By monitoring the performance of your models, you can identify areas where they are underperforming and take steps to improve their performance. This can help you get more value from your models and improve the ROI of your machine learning investments.

- Reduced cost of model maintenance:

By continuously monitoring your models, you can identify when they need to be updated or retrained, and take action to do so in a timely manner. This can help you avoid the cost of maintaining outdated or inaccurate models.

- Increased model usage:

By monitoring the performance of your models, you can identify when they are not being used as much as they could be and take steps to increase their usage. This can help you get more value from your models and increase the ROI of your machine learning investments.
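The retraining trigger mentioned under “Reduced cost of model maintenance” can be as simple as watching accuracy over a sliding window of recently labeled predictions. The class below is a hypothetical minimal sketch: it assumes that ground-truth labels eventually arrive for production predictions, and its window size, accuracy threshold, and minimum sample count are placeholder values to be tuned per model.

```python
from collections import deque
from typing import Deque


class RollingAccuracyMonitor:
    """Hypothetical helper: accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window: int = 500, threshold: float = 0.9, min_samples: int = 100):
        # Oldest outcomes fall off automatically once the deque is full.
        self._outcomes: Deque[bool] = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, prediction: object, label: object) -> None:
        """Store whether one prediction matched its (possibly delayed) ground-truth label."""
        self._outcomes.append(prediction == label)

    @property
    def accuracy(self) -> float:
        """Accuracy over the current window, or NaN before any outcome is recorded."""
        if not self._outcomes:
            return float("nan")
        return sum(self._outcomes) / len(self._outcomes)

    def needs_retraining(self) -> bool:
        """True once enough outcomes are in and windowed accuracy is below the threshold."""
        return len(self._outcomes) >= self.min_samples and self.accuracy < self.threshold
```

The `min_samples` guard keeps a handful of early errors from triggering a retrain; in practice the signal would usually raise an alert or enqueue a retraining job rather than retrain inline.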

Overall, model monitoring can help organizations improve the performance and accuracy of their models, reduce the cost of model maintenance, and increase the usage and ROI of their machine learning investments.

Short Case Study

Company: ABC Healthcare

Problem:

ABC Healthcare is a healthcare provider that uses machine learning models to predict patient outcomes and recommend treatments. However, the models are complex and hard to interpret, which makes it difficult for doctors and patients to trust their recommendations.

Solution:

ABC Healthcare implemented Explainable AI (XAI) techniques to make its machine learning models more transparent and understandable. Using XAI approaches such as feature importance and local interpretable model-agnostic explanations (LIME), the company was able to provide clear, detailed explanations for the decisions its models made.
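To illustrate the idea behind LIME, the sketch below fits a weighted linear model to a black-box model’s outputs on random perturbations around a single input; the fitted coefficients act as a local, per-feature explanation of that one prediction. It is a simplified, hypothetical version written from scratch, not the API of the open-source `lime` package: it perturbs raw features with Gaussian noise instead of using LIME’s interpretable representations, weights samples with an exponential distance kernel, and its `scale` and `kernel_width` defaults are arbitrary. The model is assumed to be any callable that returns a numeric score (for example, a predicted risk) for one feature row.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def _solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][c] * x[c] for c in range(i + 1, n))) / M[i][i]
    return x


def local_surrogate(predict: Callable[[Sequence[float]], float],
                    instance: Sequence[float],
                    n_samples: int = 500,
                    scale: float = 0.5,
                    kernel_width: float = 0.75,
                    seed: int = 0) -> Tuple[float, List[float]]:
    """Fit score ~ intercept + sum(coef_j * x_j) near `instance`; return (intercept, coefs)."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [x + rng.gauss(0.0, scale) for x in instance]  # perturbed neighbor
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, instance))
        rows.append([1.0] + z)  # leading 1.0 models the intercept
        targets.append(predict(z))  # query the black-box model
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # closer samples count more
    # Weighted least squares via the normal equations: (X^T W X) beta = X^T W y.
    p = len(instance) + 1
    A = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(p)]
         for i in range(p)]
    b = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets)) for i in range(p)]
    beta = _solve(A, b)
    return beta[0], beta[1:]
```

For a genuinely non-linear model the coefficients change with the chosen `instance`, which is the point: they describe the model’s behavior only in that neighborhood, so an explanation shown to a clinician would report the features with the largest absolute coefficients for that particular patient.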

Result:

By implementing XAI techniques, ABC Healthcare increased its doctors’ and patients’ trust in the recommendations made by its machine learning models. This helped improve patient outcomes and satisfaction and increased the overall effectiveness of the company’s healthcare services.

This is just one example of how Explainable AI can be used in a real-world setting. Many other companies across a wide range of industries are also using XAI to improve the transparency and accountability of their AI systems.

Companies Focusing on Explainable AI

Many companies are focusing on XAI to improve the transparency and accountability of their AI systems and to better understand how these systems work and how they can be improved. Here are a few examples:

- Google:

Google has been actively researching and developing XAI techniques, and has implemented various XAI approaches in its products and services, including its Cloud AI Platform.

- Microsoft:

Microsoft has also been researching and developing XAI techniques, and has implemented various XAI approaches in its products and services, such as its Azure Machine Learning platform.

- IBM:

IBM has a strong focus on XAI and has implemented various XAI approaches in its products and services, including its Watson AI platform.

- Uber:

Uber has a dedicated XAI team that develops XAI approaches and applies them to the machine learning models behind its products and services.

- OpenAI:

OpenAI is a research organization that is actively focused on developing and advancing XAI techniques and technologies.

These are just a few examples of companies that are focusing on XAI. Many other companies across a wide range of industries are also exploring and implementing XAI approaches in order to improve the transparency and accountability of their AI systems.

Overall, by using XAI techniques and model monitoring together, organizations can turn their AI systems from “black boxes” into “glass boxes,” gaining a deeper understanding of how their models function and how they can be improved. This helps organizations get more value from their AI investments and build trust in their AI systems.