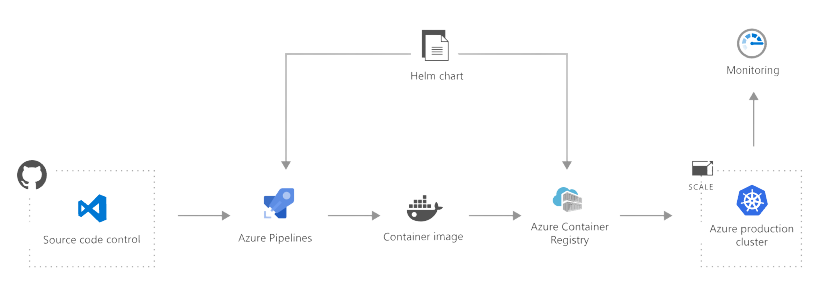

Azure Kubernetes Service (AKS) is a managed Kubernetes service offered by Microsoft Azure. It enables users to quickly and easily deploy, manage, and scale containerized applications on Azure.

Kubernetes is an open-source container orchestration platform that allows developers to automate the deployment, scaling, and management of containerized applications. It uses a declarative configuration to define the desired state of an application and continuously works to maintain that state.

With AKS, users can leverage the power of Kubernetes without having to worry about the underlying infrastructure. AKS takes care of the heavy lifting of deploying and maintaining the Kubernetes control plane, enabling users to focus on building and deploying their applications.

In this blog post, we’ll cover the following topics:

- Setting up an AKS cluster

- Deploying an application to AKS

- Scaling and upgrading the application

- Monitoring and troubleshooting the application

Setting up an AKS cluster

To get started with AKS, the first step is to create a cluster. This can be done through the Azure portal, Azure CLI, or Azure PowerShell.

Here’s an example of how to create an AKS cluster using the Azure CLI:

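A minimal sketch of such a command follows. The resource group name myResourceGroup and the cluster name myAKSCluster are hypothetical placeholders, not names from this post; only the node count, the eastus location, and the SSH key generation come from the description here.

```shell
# Hypothetical names; substitute your own.
RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"
LOCATION="eastus"

# Create a resource group to hold the cluster.
az group create --name "$RESOURCE_GROUP" --location "$LOCATION"

# Create a three-node cluster; SSH keys are generated if none exist yet.
az aks create \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --location "$LOCATION" \
  --node-count 3 \
  --generate-ssh-keys
```

Cluster creation usually takes several minutes to finish.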

This will create an AKS cluster with three nodes in the eastus location and generate SSH keys for accessing the nodes.

Deploying an application to AKS

Now that we have an AKS cluster up and running, we can deploy an application to it. In Kubernetes, applications are typically packaged as container images (for example, images built with Docker) and run in pods. A pod is the basic unit of deployment in Kubernetes, representing one or more containers that run together on the same node and share networking and storage.

To deploy an application to AKS, we need to create a deployment configuration file. This file, conventionally named deployment.yaml, defines the desired state of the application, including the number of replicas, the container image to use, and any environment variables or secrets that the application requires.

Here’s an example deployment.yaml file for a simple Node.js application:

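As a sketch, a manifest along these lines can be written from the shell. The image name myregistry.azurecr.io/my-app:1.0, the app: my-app label, and the NODE_ENV variable are assumptions made for illustration; the three replicas and container port 8080 are inferred from the scaling and service steps later in this post.

```shell
# Write a minimal Deployment manifest to deployment.yaml.
# The image name, label, and environment variable are hypothetical.
cat > deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: myregistry.azurecr.io/my-app:1.0
          ports:
            - containerPort: 8080
          env:
            - name: NODE_ENV
              value: production
EOF
```

The selector and the pod template labels must match, otherwise the deployment cannot find the pods it is supposed to manage.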

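To deploy this application, we can use the kubectl command-line tool. kubectl runs on your local machine rather than in the cluster: it can be installed with az aks install-cli, and az aks get-credentials configures it to talk to the AKS cluster.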

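A sketch of the commands, assuming the manifest is saved as deployment.yaml in the current directory, kubectl is installed locally, and the cluster uses the hypothetical names myResourceGroup and myAKSCluster:

```shell
# Hypothetical names; substitute your own.
RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"

# Merge the cluster's credentials into the local kubeconfig.
az aks get-credentials --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME"

# Create or update the resources described in the manifest.
kubectl apply -f deployment.yaml

# Check progress: the READY column shows ready/desired replicas.
kubectl get deployments
```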

After the manifest is applied, kubectl get deployments shows the status of the deployment, including how many replicas are ready, up to date, and available compared with the desired count.

Once the deployment is complete, we can create a service to expose the application to the outside world. In Kubernetes, a service is an abstract way to expose an application running on a set of pods as a network service.

To create a service for our application, we can create a service.yaml file with the following content:

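One possible version of that file is sketched here. It assumes the pods carry the label app: my-app, which is an illustrative choice rather than something stated in this post; ports 80 and 8080 are the ones described in the text.

```shell
# Write a minimal LoadBalancer Service manifest to service.yaml.
# The selector label app: my-app is an assumption.
cat > service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
    - port: 80
      targetPort: 8080
EOF
```

The selector decides which pods receive traffic, so it has to match the labels on the application's pods.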

To create the service, we can use the kubectl apply command again:

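Assuming the file is saved as service.yaml in the current directory, the command would look roughly like this:

```shell
# Path to the Service manifest (assumed file name).
SERVICE_MANIFEST="service.yaml"

# Create the service, or update it if it already exists.
kubectl apply -f "$SERVICE_MANIFEST"
```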

This will create a load balancer service that exposes our application on port 80 and forwards traffic to the pods on port 8080.

To access the application, we can use the kubectl get services command to get the external IP address of the load balancer:

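A short sketch, assuming the service is named my-app (a hypothetical name chosen to match the deployment):

```shell
# Name of the service to inspect (assumed).
SERVICE_NAME="my-app"

# The EXTERNAL-IP column stays pending until Azure has provisioned
# the load balancer; rerun the command or add --watch to wait for it.
kubectl get services "$SERVICE_NAME"
```

Once an address appears in the EXTERNAL-IP column, the application should be reachable on port 80 at that address.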

Scaling and upgrading the application

One of the key benefits of using Kubernetes is the ability to easily scale and upgrade applications. In AKS, we can use the kubectl scale command to change the number of replicas in a deployment:

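For instance, assuming the deployment is named my-app and its pods are labeled app=my-app (the label is an illustrative assumption):

```shell
# Name of the deployment to scale.
DEPLOYMENT_NAME="my-app"

# Set the desired number of replicas to 5.
kubectl scale deployment "$DEPLOYMENT_NAME" --replicas=5

# List the pods to watch the new replicas start.
kubectl get pods -l app=my-app
```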

This will set the desired number of replicas to 5. Starting from a deployment with 3 replicas, Kubernetes creates two additional pods to meet the desired state.

To upgrade the application, we can update the container image in the deployment configuration and apply the changes using kubectl apply.

For example, if we want to upgrade to version 2.0 of our application, we can update the deployment.yaml file like this:

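In the sketch below only the image tag differs from a version 1.0 manifest. The registry path myregistry.azurecr.io/my-app is a hypothetical placeholder, and the replica count of 5 assumes we want to keep the scaled-up size, since applying a manifest resets the replica count to whatever the file declares.

```shell
# Rewrite deployment.yaml with the image tag bumped to 2.0.
# The image path and the app: my-app label are assumptions.
cat > deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 5
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: myregistry.azurecr.io/my-app:2.0
          ports:
            - containerPort: 8080
EOF
```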

Then, we can apply the changes using the kubectl apply command:

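A sketch of that step, assuming the deployment is named my-app and the edited manifest is deployment.yaml in the current directory:

```shell
# Name of the deployment being upgraded.
DEPLOYMENT_NAME="my-app"

# Apply the edited manifest; Kubernetes starts replacing the pods.
kubectl apply -f deployment.yaml

# Block until the rollout has finished or failed.
kubectl rollout status "deployment/$DEPLOYMENT_NAME"
```

If the new version misbehaves, kubectl rollout undo deployment/my-app returns the deployment to its previous revision.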

This will perform a rolling update: Kubernetes replaces the pods running the old image with pods running the new one a few at a time, so the application stays available during the upgrade as long as the new pods start successfully.

Monitoring and troubleshooting the application

To monitor and troubleshoot the application in AKS, we can use a variety of tools and techniques.

One useful tool is the kubectl logs command, which allows us to view the logs for a pod or container. For example, if we want to view the logs for the my-app container in the my-app pod, we can use the following command:

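Pods created by a deployment get generated names, so this sketch asks for logs through the deployment instead of a fixed pod name. It assumes the deployment and its container are both named my-app.

```shell
# Deployment and container to read logs from (assumed names).
DEPLOYMENT_NAME="my-app"
CONTAINER_NAME="my-app"

# Print logs from one pod of the deployment; add -f to stream them.
kubectl logs "deployment/$DEPLOYMENT_NAME" -c "$CONTAINER_NAME"
```

To target a specific pod, list the pods with kubectl get pods and pass the pod's name in place of the deployment reference.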

Another useful tool is kubectl describe, which provides detailed information about a resource, including events and metadata. For example, if we want to view the details for the my-app deployment, we can use the following command:

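For the my-app deployment, the command would look roughly like this:

```shell
# Deployment to inspect.
DEPLOYMENT_NAME="my-app"

# Show the pod template, replica counts, conditions, and recent events.
kubectl describe deployment "$DEPLOYMENT_NAME"
```

The Events section at the end of the output is often the quickest place to spot scaling problems or failed rollouts.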

In addition to these tools, we can use Azure Monitor for containers (now called Container insights) to monitor the performance and health of our AKS cluster and applications.

Azure Monitor for containers provides real-time insights into the performance and health of containerized applications, including CPU and memory usage, network traffic, and error logs.

To enable Azure Monitor for containers, we can use the az aks enable-addons command:

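A minimal sketch, again using the hypothetical names myResourceGroup and myAKSCluster for the resource group and cluster:

```shell
# Hypothetical names; substitute your own.
RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"

# Turn on the monitoring add-on for the existing cluster.
az aks enable-addons \
  --addons monitoring \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME"
```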

This will enable Azure Monitor for containers. If no Log Analytics workspace is specified, a default workspace is used for storing the monitoring data and is created if it does not already exist.

In conclusion, Azure Kubernetes Service (AKS) is a powerful tool for deploying and managing containerized applications on Azure. With AKS, users can leverage the benefits of Kubernetes without having to worry about the underlying infrastructure, and can easily scale and upgrade their applications as needed. By using tools like kubectl and Azure Monitor for containers, users can monitor and troubleshoot their applications and ensure that they are running smoothly.